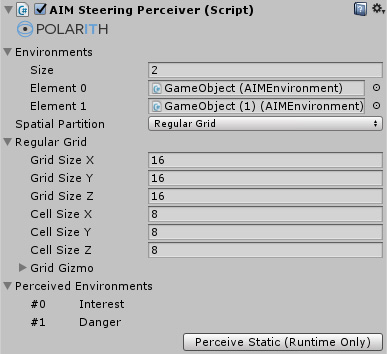

front-end: AIMSteeringPerceiver

inherits from: AIMPerceiver<T>

The Steering Perceiver is used to group sets of Environment components and to extract their percept data (relevant to steering algorithms) in order to make this information available to agents and their corresponding behaviours. Furthermore, the Steering Perceiver can use spatial structures to ensure fast access to those percepts that are actually relevant for an agent. To improve performance, it is highly recommended to use this feature wherever possible to organize and optimize your scene instead of using the direct input of GameObjects within behaviours.

The data of every game object that is implicitly referenced by a Steering Perceiver is extracted during every main loop update (unless the corresponding environment is marked as static). This is done selectively: the data is extracted only if the corresponding object is relevant to at least one agent behaviour. Expensive allocations are performed only if the element count of an Environment exceeds the capacity of the internally used memory. This may happen when many new objects are spawned at once.
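
The allocation behaviour described above can be illustrated with a small, language-agnostic sketch. It is not the plugin's implementation: the class `PerceptBuffer`, its growth policy, and the `update` helper are hypothetical names chosen for illustration. The sketch only shows the general idea of reusing memory and reallocating solely when the element count exceeds the current capacity.

```python
class PerceptBuffer:
    """Hypothetical reusable buffer (illustration only, not the plugin's API)."""

    def __init__(self, capacity=4):
        self._slots = [None] * capacity  # memory that is reused across updates
        self.count = 0                   # number of valid entries
        self.reallocations = 0           # how often the buffer had to grow

    @property
    def capacity(self):
        return len(self._slots)

    def fill(self, items):
        # Grow only if the new element count exceeds the current capacity.
        if len(items) > self.capacity:
            new_capacity = max(len(items), 2 * self.capacity)  # assumed growth policy
            self._slots = [None] * new_capacity
            self.reallocations += 1
        for i, item in enumerate(items):
            self._slots[i] = item
        self.count = len(items)

    def view(self):
        return self._slots[:self.count]


def update(buffer, objects, is_static, is_relevant):
    """Extract data only for non-static environments that some behaviour needs."""
    if is_static or not is_relevant:
        return False  # nothing extracted in this update
    buffer.fill(objects)
    return True
```

In this sketch, repeated updates with a stable object count never allocate; only a sudden spawn of many objects triggers a single reallocation.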

More detailed information about the perception pipeline is given by the corresponding manual pages.

This component has the following specific properties.

| Property | Description |
|---|---|
| Environments | Manages a set of Environments. The labels of the plugged in environments are listed below under Perceived Environments. If the perceiver is referenced within a Steering Filter, these labels can be used in behaviours to access the corresponding percept data. |
| SpatialPartition | Defines what type of spatial structure is used to optimize the performance. Every type has its own advantages and disadvantages (see below). |

With None, no spatial structure is used. Consequently, the Steering Filter of every agent iterates over all percepts. A percept is passed to the agent's behaviours if its object satisfies a distance check (see the Steering Filter component reference).
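
As a rough sketch of what iterating all percepts with a distance check means, consider the following brute-force filter. The function name `percepts_in_range` and the tuple-based positions are assumptions made for illustration; the actual check is performed by the Steering Filter component.

```python
def percepts_in_range(agent_pos, percept_positions, max_range):
    """Brute force: test every percept against the agent's range (illustration only)."""
    max_range_sq = max_range * max_range  # compare squared distances, no sqrt needed
    result = []
    for pos in percept_positions:
        dx = pos[0] - agent_pos[0]
        dy = pos[1] - agent_pos[1]
        dz = pos[2] - agent_pos[2]
        if dx * dx + dy * dy + dz * dz <= max_range_sq:
            result.append(pos)
    return result


nearby = percepts_in_range((0, 0, 0), [(3, 4, 0), (30, 0, 0), (0, 0, 25)], 25)
```

The cost of this approach grows with the number of agents multiplied by the number of percepts, since every agent visits every percept.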

Pro:

Contra:

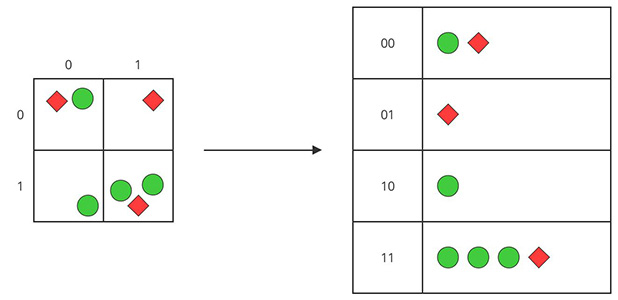

With Regular Grid, a regular grid structure is used to perform spatial hashing. Every object on the grid is mapped to its enclosing cell, so that only objects in grid cells near the agent are iterated instead of all objects in the scene. Depending on the grid resolution and the distribution of the objects in the scene, this can save many expensive operations.

It is always worth using a regular grid since, in the worst case, it performs as well as no spatial partition.

Figure 1: Illustrates the concept of the Regular Grid. Every object is mapped to a list based on the cell it is located in. Instead of iterating all objects, an agent just has to iterate the lists of relevant cells.
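
The concept from Figure 1 can be sketched as a minimal spatial hash. This is an illustration under assumptions, not the plugin's implementation: the class `RegularGrid`, the uniform `cell_size`, and the unbounded dictionary of cells are hypothetical simplifications.

```python
import math
from collections import defaultdict


class RegularGrid:
    """Minimal spatial hash with cubic cells (illustration only)."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(list)  # cell coordinate -> list of (id, position)

    def cell_of(self, pos):
        # Map a position to the integer coordinates of its enclosing cell.
        return tuple(math.floor(c / self.cell_size) for c in pos)

    def insert(self, obj_id, pos):
        self.cells[self.cell_of(pos)].append((obj_id, pos))

    def query(self, pos, radius):
        # Visit only the cells overlapped by the query range.
        lo = self.cell_of(tuple(c - radius for c in pos))
        hi = self.cell_of(tuple(c + radius for c in pos))
        radius_sq = radius * radius
        found = []
        for ix in range(lo[0], hi[0] + 1):
            for iy in range(lo[1], hi[1] + 1):
                for iz in range(lo[2], hi[2] + 1):
                    for obj_id, p in self.cells.get((ix, iy, iz), ()):
                        # Exact distance check for objects in candidate cells.
                        if sum((a - b) ** 2 for a, b in zip(p, pos)) <= radius_sq:
                            found.append(obj_id)
        return found


grid = RegularGrid(cell_size=10)
grid.insert("a", (1, 1, 1))
grid.insert("b", (50, 50, 50))
grid.insert("c", (12, 1, 1))
near = grid.query((0, 0, 0), radius=15)
```

Object "b" lives in a cell far from the query position, so its list is never touched. This is where the savings over the brute-force iteration come from.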

Pro:

Contra:

The Regular Grid has the following specific sub-properties.

| Property | Description |
|---|---|
| CellCountX | Defines the number of cells along the x-axis, needs to be at least 1. |
| CellCountY | Defines the number of cells along the y-axis, needs to be at least 1. |
| CellCountZ | Defines the number of cells along the z-axis, needs to be at least 1. |
| CellSizeX | Defines the cell size along the x-axis in Unity units, needs to be greater than 0. |
| CellSizeY | Defines the cell size along the y-axis in Unity units, needs to be greater than 0. |
| CellSizeZ | Defines the cell size along the z-axis in Unity units, needs to be greater than 0. |
| GridGizmo.Enabled | If enabled, the grid is displayed in the scene view. |
| GridGizmo.Outline | If enabled, only the outer boundaries are displayed. |
| GridGizmo.Color | Controls color of the grid visualization. |
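
To illustrate how per-axis cell counts and cell sizes might interact, the following sketch maps a position to a flat cell index. The grid origin, the flat index layout, and the function name `cell_index` are assumptions for illustration; the plugin's internal layout is not documented here. The grid covers CellCount times CellSize Unity units per axis, and positions outside that extent have no cell in this sketch.

```python
def cell_index(pos, origin, cell_size, cell_count):
    """Return a flat cell index, or None if pos lies outside the grid (illustration only)."""
    indices = []
    for p, o, size, count in zip(pos, origin, cell_size, cell_count):
        i = int((p - o) // size)  # integer cell coordinate along this axis
        if i < 0 or i >= count:
            return None           # outside the extent count * size
        indices.append(i)
    ix, iy, iz = indices
    nx, ny, _ = cell_count
    return ix + nx * (iy + ny * iz)  # assumed x-major flat layout


index = cell_index((25, 7, 15), origin=(0, 0, 0),
                   cell_size=(10.0, 5.0, 10.0), cell_count=(4, 2, 3))
```

Choosing the cell size close to the agents' perception range keeps the number of visited cells small while avoiding overly long per-cell lists.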

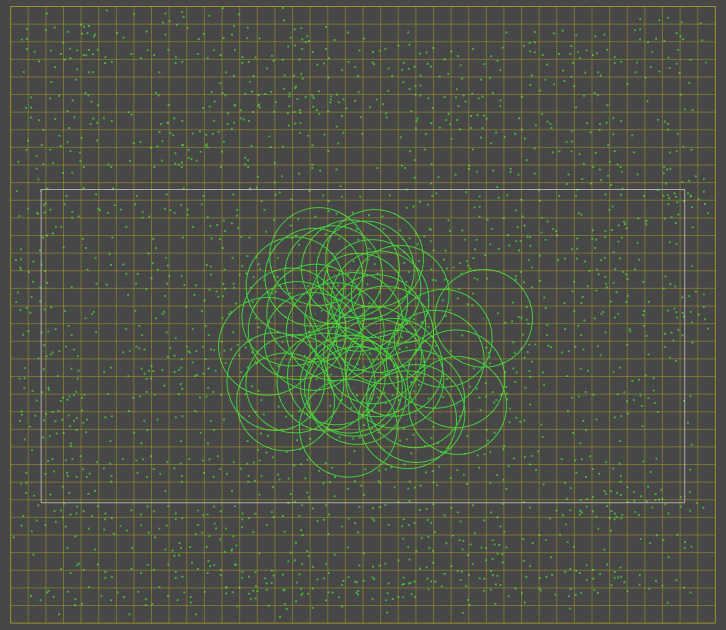

The following example should help you understand which structure might be the best choice for your scenario. The values were measured on a fairly old Intel i5-2500K processor. The scenario: 40 agents and n dynamic objects. Every agent has the following parameterization:

The agents are evenly distributed in a sub-area of the level (which means that not everything is relevant to them), and the dynamic objects are evenly distributed across the level. The level has a size of 360x315 Unity units (see Figure 2). The results are displayed in the following table.

Figure 2: Example scenario with 2000 objects. The green circles represent the perception range of every agent.

| Spatial Partition | Range | Objects | Time |
|---|---|---|---|
| None | -1 | 500 | 35 ms |
| None | -1 | 1000 | 63 ms |
| None | -1 | 2000 | 124 ms |
| None | 25 | 500 | 10.3 ms |
| None | 25 | 1000 | 15 ms |
| None | 25 | 2000 | 24.5 ms |
| Regular Grid | 25 | 500 | 8.9 ms |
| Regular Grid | 25 | 1000 | 10.8 ms |
| Regular Grid | 25 | 2000 | 17.5 ms |

Note that additional built-in mechanisms such as load balancing and multithreading can push the performance even further. For example, approximately 11 ms are needed for 1000 objects using a regular grid. This work could be distributed over 6 frames with an update frequency of 10, and multithreading would then make the workload vanish completely.
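
The load balancing arithmetic can be worked through in a short sketch. It assumes a frame rate of 60 frames per second, which is not stated in the text but would explain why an update frequency of 10 leaves 6 frames per update. The function name `per_frame_cost` is hypothetical.

```python
def per_frame_cost(total_ms, frame_rate, update_frequency):
    """Spread one update's cost evenly over the frames between two updates."""
    frames_per_update = frame_rate / update_frequency
    return frames_per_update, total_ms / frames_per_update


# Assumption: 60 frames per second; 11 ms is the approximate cost from the text.
frames, cost_ms = per_frame_cost(total_ms=11.0, frame_rate=60, update_frequency=10)
```

Under these assumptions, the 11 ms update costs a little under 2 ms per frame when spread over 6 frames, before any multithreading is applied.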

To put the numbers above into perspective, the following table expresses them as percentages, using the worst case as 100%.

| Spatial Partition | Range | Objects | Performance |
|---|---|---|---|
| None | -1 | 1000 | 100% |
| None | 25 | 1000 | 24% |
| Regular Grid | 25 | 1000 | 17.2% |
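
The percentages can be reproduced approximately from the measured times for 1000 objects. The sketch below uses the rounded values from the timing table, so its results differ slightly from the percentages listed above.

```python
# Measured times in ms for 1000 objects, taken from the timing table.
timings = {
    ("None", -1): 63.0,
    ("None", 25): 15.0,
    ("Regular Grid", 25): 10.8,
}

worst = max(timings.values())  # the worst case is defined as 100%
relative = {key: round(100 * t / worst, 1) for key, t in timings.items()}
```

With the rounded inputs this yields 23.8% and 17.1%, close to the 24% and 17.2% in the table; the small difference presumably stems from unrounded measurements.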

The button Perceive Static is used to update static environments at runtime. This might be useful for debugging purposes in the editor. Note that this can also be done via code with PerceiveStatic if you want to make use of this functionality at runtime (outside the Unity editor).

By using our extendable API, it is possible to write your own Steering Perceiver and Steering Filter to define individual conditions for providing relevant percepts. As the sections above describe, a successful strategy for increasing the performance is achieved by letting agents perceive only the things they really need.